Politico reports that the acting head of CISA uploaded internal government documents marked “for official use only” to ChatGPT, triggering security concerns.

The acting head of the U.S. Cybersecurity and Infrastructure Security Agency (CISA) uploaded sensitive internal government documents to ChatGPT, triggering security alerts and an internal review, according to a report by Politico.

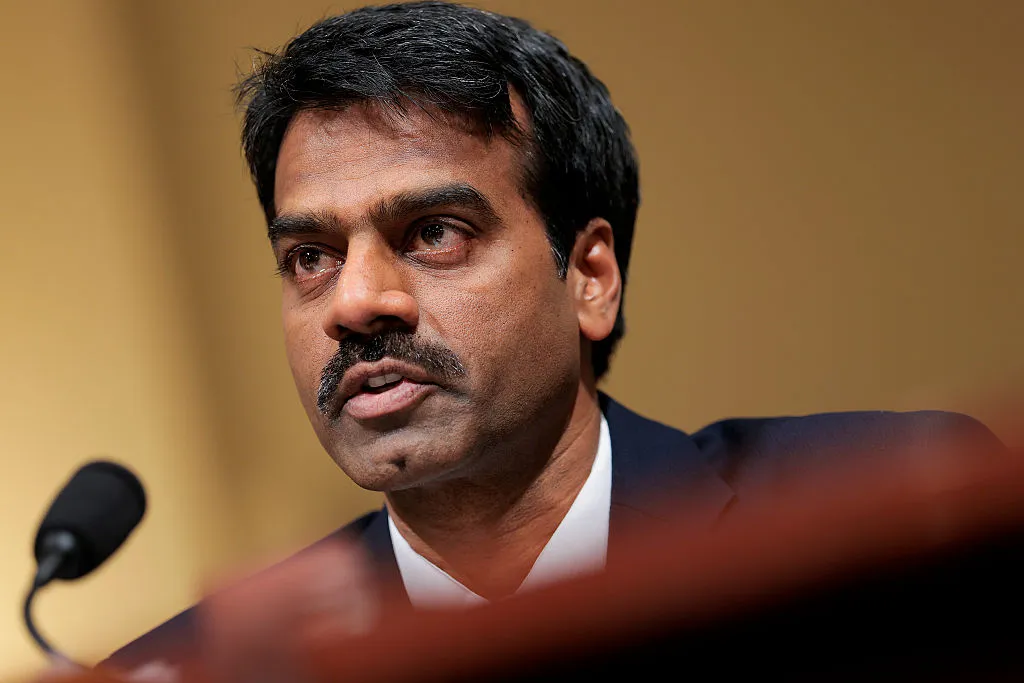

The outlet reported Tuesday that Madhu Gottumukkala, CISA’s acting director and a Trump appointee, uploaded contracting documents marked “for official use only” to the public artificial intelligence platform. Officials said the uploads set off multiple automated security warnings designed to prevent unauthorized disclosure of government information from federal networks.

According to officials familiar with the matter, Gottumukkala had been granted a special exception to use ChatGPT early in his tenure, at a time when most CISA employees were prohibited from accessing the platform. The Department of Homeland Security (DHS), which oversees CISA, later initiated an assessment to determine whether the uploads posed any risk to government security.

While the documents were not classified, experts note that uploading internal government materials to public large language models carries risk. Such systems may retain or learn from the information, which could lead to inadvertent disclosure to, or reuse by, other users.

A CISA spokesperson told Politico that Gottumukkala’s use of ChatGPT was “short-term and limited” but did not elaborate on the nature of the materials uploaded or the outcome of DHS’s review.

Before joining CISA, Gottumukkala served as chief information officer for the state of South Dakota under then-Governor Kristi Noem. Following his appointment to the federal cybersecurity agency, he reportedly failed a counterintelligence polygraph examination. Homeland Security later described that test as “unsanctioned.”

Subsequently, Gottumukkala suspended the access of six career CISA staff members to classified information, a move that drew internal scrutiny and concern among agency personnel, according to prior reporting.

The incident adds to broader questions about the use of artificial intelligence tools within federal agencies, particularly those tasked with safeguarding sensitive government and national security information.